by Christoph Meyer

Could the mounting death toll, pain and costs from the coronavirus crisis have been prevented or at least lessened? Leaders and senior officials in the US and the UK have been accused of recklessly ignoring warnings, whilst Chinese officials even stand accused of suppressing them. The pandemic has been labelled the “worst intelligence failure in U.S. history” and the UK’s “greatest science policy failure for a generation”. Future public inquiries will hopefully address not just questions of accountability but also, more importantly, which lessons should be learned.

Do Not Just Blame the Decision-Makers: Expert Warners Also Need to Do Better

Even at this early stage, however, there are striking parallels between the coronavirus crisis and the warning-response gap in conflict and mass atrocity prevention. Our extensive research on cases ranging from Rwanda 1994 to Crimea 2014 found a widespread tendency in the literature to overestimate the supply of “warnings” from inside and outside government and to underestimate how difficult persuasive warning actually is. Warners are typically portrayed as altruistic, truthful and prescient, yet doomed to be ignored by irresponsible, ignorant and self-interested leaders. Imagine Princess Cassandra of Troy trying to convince Mayor Vaughn from Jaws.

The first in-depth investigations of decision-making on COVID-19 suggest that at least some of the warnings in the US case suffered from credibility problems, whereas UK experts were criticised for not warning earlier, more explicitly and more forcefully. This raises important questions about individual experts’ motivations, capabilities and strategies, but also about the structural and cultural factors that can impede early, credible, actionable and, above all, persuasive warnings.

Expert Warners Need to Learn What the Obstacles Are for Their Messages to Be Heard

In our recent book we compare warning about war to the challenge of conquering an obstacle course against various competitors and in often adverse weather conditions. The most successful competitors will be those who combine natural ability, high motivation, regular training and risk-taking with a bit of luck. Many expert warners do not realise what the obstacles are, have not been trained to overcome them, or are unwilling to take some of the professional risks involved in warning.

We found that the most effective warners tend to be those who (i) have acquired some personal trust through previous contacts with decision-makers, (ii) have a positive professional reputation and a track record in their previous analysis and warnings, (iii) understand decision-makers’ agendas and “hot buttons”, (iv) share the same broad political or ideational outlook, and finally (v) are willing to take some professional risks to get their message across.

Based on our research, we suggest that expert warners consider the following eight points to increase their chances of being heard by decision-makers.

1. Understand That Decision-Makers Work in a Completely Different Environment.

First and foremost, expert warners need to understand that senior officials inhabit a world different from their own. Most experts tend to consume information from a relatively narrow range of quality sources focused on a specific subject area. They evaluate the quality of the method and evidence behind causal claims and, sometimes, their potential to solve a given problem. Warnings are relatively rare in this world. Decision-makers, in contrast, live in a world where warnings from different corners are plentiful, and competing demands for their attention are constant and typically tied to requests for more government spending. They are trained to look for the interest behind the knowledge claim and are prone to see warnings as politically biased and potentially self-interested attempts at manipulation. A New York Times investigation suggests, for example, that at least some of the coronavirus warnings were discounted because of perceived political bias regarding China.

2. Credibility Is Key to Who Is Being Noticed and Heard.

Even experts without an apparent or hidden agenda can and do contradict each other, including those working in the same field. On any given issue there is rarely just one authoritative source of knowledge; rather, multiple individuals and organisations supply it. The cacophony grows louder when it comes to assessing the proportionality and unintended effects of measures to control the disease, including the inadvertent increase in non-COVID deaths and the severe loss of quality of life.

When politicians claim to be simply following “expert advice”, as was the case in the UK, they obscure the necessary decisions about the difficult trade-offs and dilemmas that arise from diverse expert advice. Decision-makers need to decide whose advice to accept and to what extent. This is why credibility is key to who is noticed and believed.

This means warners need to ask themselves whether the people who ultimately take political decisions are likely to perceive them as credible or to regard them with suspicion. If the latter, they can instead target more receptive scientists sitting on official expert committees, or organisations closer to decision-makers. They can publish pieces in news media likely to be read by politicians rather than in outlets they might themselves prefer. Or they can boost their credibility by teaming up with others through open letters or joint statements.

3. To Cut Through the Noise, You Might Have to Take Risks.

The next challenge is for warnings to stand out from the everyday noise of information and reporting. Officials may, for instance, choose an unusual channel or mode of communication. We know that ambassadors have used démarches, a rarer and more formal format than their routine reports, to highlight the importance of their analysis.

Senior officials may cut through when they are ready to put their career and professional reputation on the line, as Mukesh Kapila did when he warned about Darfur on BBC Radio 4 in 2004. The same lesson can be drawn from the case of Capt. Brett E. Crozier, who was fired after copying too many people into his outspoken warning about the spread of the virus on the aircraft carrier Theodore Roosevelt.

Many external experts and intelligence analysts, such as the epidemiology professor Mark Woolhouse, are satisfied just to be “heard and understood”. They do not seek to have their advice accepted, prioritised and acted upon. However, a more proactive and risk-taking approach is sometimes needed, as was arguably the case with COVID-19 according to Professor Jonathan Ball: “Perhaps some of us should have got up in front of BBC News and said you lot ought to be petrified because this is going to be a pandemic that will kill hundreds of thousands of people. None of us thought this was a particularly constructive thing to do, but maybe with hindsight we should have. If there had been more voices, maybe politicians would have taken this a bit more seriously.”

4. Spell Out a Range of Expected Consequences.

Given leaders’ constant need to prioritise, experts need to spell out the range of expected consequences. They need to dare to be more precise about the likelihood of something happening and about the timing, scale and nature of its consequences. Too often we found warnings to be vague or hedged. Echoing Ball’s account, an in-depth Reuters investigation also suggests that UK ‘scientists did not articulate their fears forcefully to the government’ and could have spelled out the probable number of deaths earlier.

5. Focus on What Matters to Decision-Makers, Not Yourself.

Warners need to focus on what matters most to decision-makers, not to themselves. One of the most successful warnings we came across in our research on conflict highlighted not just the humanitarian suffering, but also how the escalation would resonate with important domestic constituencies such as evangelical Christians and how it might harm electoral chances. NGOs focused on conflict prevention and peace may find it easier to make their case if they also highlight the indirect and less immediate effects of instability on migration, jobs and security.

6. Understand the Reference Points and Contexts That Decision-Makers Work With in Any Given Situation.

Warners should try to understand and, if necessary, challenge the cognitive reference points that underpin leaders’ thinking. In foreign policy, decision-makers often draw on lessons learnt from seemingly similar or recent cases in the same region. For instance, preventive action against ethnic conflict in Macedonia in 2001 benefited from fresh lessons learnt from the Kosovo conflict. Spelling out precisely what is similar or different about present threats compared with familiar previous cases can encourage decision-makers to question and change their beliefs.

In the current crisis, one reason senior officials in Europe may have underestimated the danger of the coronavirus is that their reference point and planning assumption was a flu epidemic. Many also still remembered that the UK had been accused of overreacting to the swine flu of 2009, which proved much milder than expected. In contrast, leaders in many Asian countries had other, more dangerous viruses as the cognitive reference points underpinning their pandemic plans.

7. Know What Kind of Evidence and Methods Decision-Makers Trust the Most.

Experts should try to understand what kinds of evidence and methods decision-makers and their close advisors consider most credible. In foreign affairs, decision-makers often trust secret intelligence based on human sources more than assessments based on expert judgement using open sources. Other decision-makers prefer indicators and econometric models to qualitative expert judgement. Experts unfamiliar with these particular methods could seek to collaborate with colleagues who can translate their findings into the most suitable language.

8. Include Actionable Recommendations With the Warnings.

The best warnings are those that also contain actionable recommendations. Decision-makers are more receptive to warnings that give them information they can act on today, ideally including a range of options. The influential model by the Imperial College team appeared to resonate so well not only because of the method used, but also because it gave decision-makers a clear sense of how death rates might develop under the different policy options being discussed.

The dilemma for warners is that they can undermine their own credibility by suggesting policy options that are considered politically unfeasible. According to a Reuters account, the lockdown measures adopted in China and Italy were initially considered inconceivable for the UK and thus were not examined in depth early on. Warners need to resist a narrow understanding of what is feasible and sometimes need to push to widen the menu of policy options under consideration.

It Is Also up to Government Bureaucracies and Decision-Makers to Be Open to Warnings

None of this is to deny that the key explanation may ultimately lie with unreceptive decision-makers who cannot deal with uncomfortable advice or who create blame-shifting cultures in which many officials just seek to cover their backs. We should approach any justification of why leaders did not notice or believe a warning with a healthy dose of scepticism. Politicians can be expected to ring-fence at least some of their time to regularly consider new and serious threats to the security and well-being of citizens, regardless of distractions from media headlines. There should be clarity about who is responsible for acting, or not acting, on warnings. Leaders should ask probing questions of experts who bring them reassuring news in order to tease out key uncertainties and downside risks, something that was allegedly lacking in the UK case. They need to ensure sufficient diversity in the advice they receive through expert committees, and to create channels for fast-tracking warnings and opportunities for informally expressing dissent from prevailing wisdom. Leaders have a responsibility to build cultures in which high-quality warnings can be expressed without fear of punishment or career disadvantage.

One of the questions to be addressed in post-mortems will be whether the relationship of politicians to the intelligence community in the US and to health professionals in the UK was conducive to timely warning and preventive action. Have experts allowed themselves to be politicised? Have they cried wolf too often and on too many issues? Or, conversely, were they affected by groupthink and did they hesitate to ask difficult questions sooner and more forcefully? Keeping expert warners in the picture matters greatly if the right lessons are to be learned from the crisis. The best experts with the most important messages need to find ways of cutting through, regardless of who happens to sit in the White House, Downing Street, the Élysée Palace or the Chancellery. Only with the benefit of hindsight are warning, and acting on it, easy.

This article is a reposting of Christoph Meyer’s article with the kind cooperation of Peacelab.

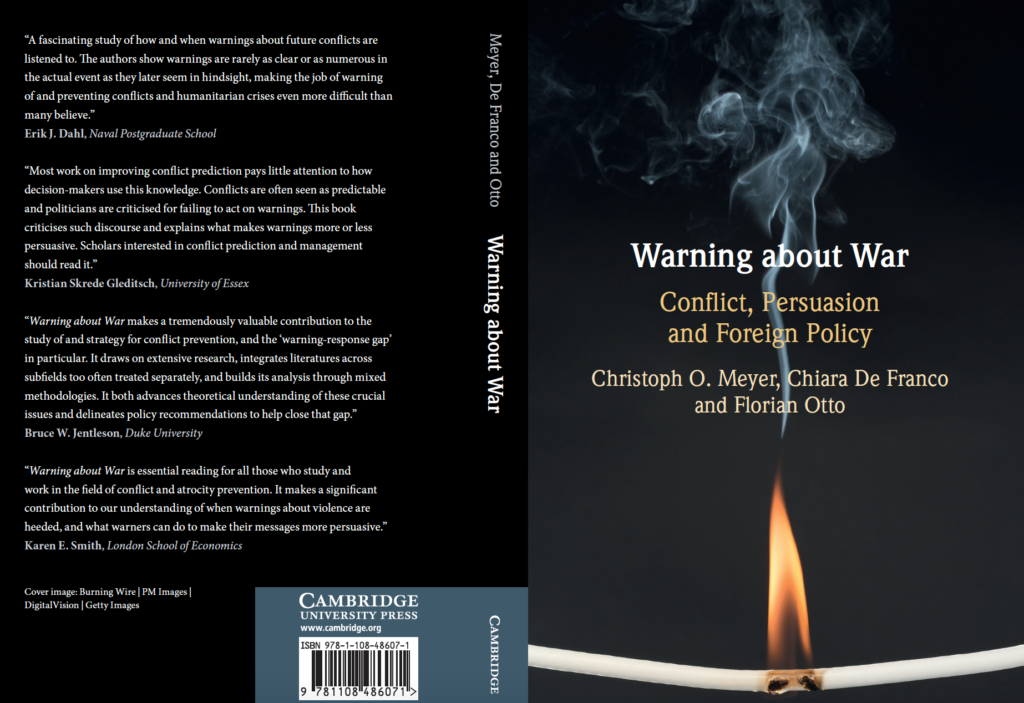

Christoph Meyer is the co-author (with Chiara De Franco and Florian Otto) of “Warning about War: Conflict, Persuasion and Foreign Policy” and leads a research project on Learning and Intelligence in European Foreign Policy. Support from the European Research Council and the UK Economic and Social Research Council is gratefully acknowledged.